Home Entertainment Blog Archive

Brought to you by your friendly, opinionated, Home Entertainment and Technology writer, Stephen Dawson. Here I report, discuss, whinge or argue on matters related to high fidelity, home entertainment equipment and the discs and signals that feed them. Since this Blog is hand-coded (I like TextPad), there are no comments facilities. But feel free to email me at scdawson [at] hifi-writer.com. I will try to respond, either personally or by posting here emails I consider of interest. I shall assume that emails sent to me here can be freely posted by me unless you state otherwise.

This archive is for an uncertain period commencing Thursday, 11 April 2007

In my Canberra Times column this week I discussed 1080p24 as the ideal delivery standard for Blu-ray and HD DVD. Today I received an email on this, thus:

I had never heard of 1080p24 or even conceived that it might be possible. But how on earth does it avoid flicker, the bane of all TVs and early computer displays? And just what kind of display device do you need to display 24 fps? Presumably the answer has a lot to do with how flat panel devices can be made to work.

Flicker is not a problem with modern digital displays. Flicker occurs on scanning displays, like CRTs, because they rely on the persistence of output from the phosphors on the screen after the scanning electron beam has passed. A slow frame rate leaves too long an interval before any given pixel is refreshed, so you get flicker. Digital displays hold all the signal data in memory until a full frame has been collected, and then switch this instantly to the display. This is then held on the display while the memory fills up with the next frame, and then this is shown.

Whether any given frame has to be held for 1/24, 1/50 or 1/60 of a second doesn't matter, because there is no fading between frames.

I used to run my old computer CRT monitor at 85 hertz (the highest it was capable of at the resolution I was using) to avoid flicker. My new LCD monitor only runs at 60 hertz max, but there is no flicker at all. It could probably run at 10 hertz without flicker, if it were so specced, and still meet my needs, except for DVD playback.

Today I embarked upon a begging mission. I wrote to eight different purveyors of projectors and asked if they'd like to lend me one for at least several months. Odd behaviour? Here's why.

I shall henceforth be writing four HD DVD or Blu-ray disc reviews for each edition of 'Sound and Image' magazine. The focus will be on the technical quality of the titles, rather than the attractiveness of the content. But there's a problem.

To do a really good job I need a HD DVD player, a Blu-ray disc player and a true high definition (1,920 by 1,080) projector. None of which I can afford to purchase. I have already successfully prevailed upon Pioneer to lend me its new BDP-LX70 BD player (1080p24 output, networking capability) as soon as it comes in. I am working on a HD DVD player. And today I started working on a projector. Thus the eight emails. I have reviewed all but one of the projectors I enquired about, and the other one I will be reviewing within the fortnight.

To be honest, I wasn't all that hopeful. But within a couple of hours Epson had come back to me offering to lend me the EMP-TW1000 projector for four months. Brilliant!

But I did have one concern to settle before I said 'yes'. While I reviewed it several months ago, at the time I did not have access to a 1080p24 source device, so I couldn't be certain it could handle that preferred signal standard. So I decided to google up a few reviews.

I eventually found a decent review at Projector Central (apparently they use a different model name in the US). And this confirmed that the projector does, indeed, handle 1080p24 signals. So I said 'yes' to Epson, and that's the projector I'll be using for these movie reviews.

But along the way I found a wonderful example of how not to review product. I commend it to your attention. We have here a home theatre projector, full high definition, for review, and the reviewer plugs it into a ... laptop! The opinion is expressed that '[a]ny white wall is fine for projection' (oh yeah, a high gloss one?) and that Screen Goo 'is highly reflective', as though that's some kind of virtue. A mirror is highly reflective, but doesn't make much of a projection screen because it burns holes in your retinas. A projection screen reflects nearly all (preferably all) the incident light, but in a diffuse manner. I imagine Screen Goo does this as well.

And the real killer:

Obviously, for maximum clarity it is best not to set up the projector in an extremely bright room. For testing purposes, however, I did, and though the colours weren't as vivid it still looked fine.

Huh? One of the selling points of this projector is a claimed contrast ratio of 12,000:1 thanks to the dynamic iris. That's totally wasted with any ambient light in the room at all.

I've been reviewing a lot of subwoofers lately and for almost all of them the manufacturer quotes two power specifications for their built-in amplifiers: the continuous output power (sometimes erroneously labelled 'RMS'), and 'dynamic peak' or similar words. The latter is typically double or more of the former. Some claim that this is a truer representation of what a subwoofer amplifier will deliver in the usual course of business. This is a reasonable argument, or would be if we had some sense of how it was actually measured, and whether it is measured the same across the industry.

Unfortunately none of the manuals actually gave this information. Except, tonight I'm looking at a JBL sub/sat system and its manual actually describes the measurement technique. I quote:

The Peak Dynamic Power is measured by recording the highest center-to-peak voltage measured across the output of a resistive load equal to the minimum impedance of the transducer, using a 50Hz sine wave burst, 3 cycles on, 17 cycles off.

A part of this is reasonable. The 3-on, 17-off bursts give the power supply capacitors a chance to charge up again and may well come close to something like real-world conditions.

But what's this about 'center-to-peak'? When you're doing power output measurements, you do indeed measure the voltage, and the centre to peak is the easiest to measure on a CRO. You couldn't really use an RMS voltmeter because the offs would be averaged with the ons.

But if you use a centre to peak measurement, you should then divide by the square root of two, or multiply by sin(45°), which is the same thing, because for a clean sine wave this gives you the RMS value of the voltage. Omitting this step overstates the voltage by 41% and, because power depends on the square of the voltage, doubles the power figure.
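To put numbers on the RMS step, here is a minimal sketch. The 40 volt peak and the 8 ohm load are made-up illustration values, not figures from the JBL manual, and the function names are my own.

```python
import math

def power_from_peak_voltage(v_peak, load_ohms):
    """Power of a clean sine wave, given its centre-to-peak voltage."""
    v_rms = v_peak / math.sqrt(2)   # same as v_peak * sin(45 degrees)
    return v_rms ** 2 / load_ohms

def power_without_rms_step(v_peak, load_ohms):
    """What you get if the peak voltage is squared without converting to RMS."""
    return v_peak ** 2 / load_ohms

v_peak = 40.0   # volts, hypothetical reading from the CRO
load = 8.0      # ohms, hypothetical resistive load

correct = power_from_peak_voltage(v_peak, load)    # about 100 watts
inflated = power_without_rms_step(v_peak, load)    # 200 watts

print(f"With RMS conversion:    {correct:.1f} W")
print(f"Without RMS conversion: {inflated:.1f} W")
print(f"Voltage overstated by {(math.sqrt(2) - 1) * 100:.0f}%")
```

Whatever voltage and load you plug in, the ratio between the two power figures stays at two.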

Then there is the matter of choosing the lowest impedance in the loudspeaker's operating band (I assume they're talking about the operating band!). To calculate power from voltage you square the voltage and divide it by the load resistance (let's forget about the impedance, which complicates matters). Let's say the average resistance of the driver across its operating frequencies is 8 ohms. It would not be unusual for the driver to drop to 4 ohms at some frequency or other. By using this 4 ohms value you are increasing the power figure significantly (not doubling it, because the maximum undistorted voltage is generally lower into a lower impedance than it is into a higher impedance, but the drop-off is not proportional to the reduction in impedance so there is still some further increase.)
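A rough sketch of the impedance effect follows. Both voltages are invented for illustration; all I am assuming is that the amplifier manages a somewhat lower undistorted voltage into 4 ohms than into 8 ohms.

```python
def power_watts(v_rms, load_ohms):
    """Power into a purely resistive load: voltage squared over resistance."""
    return v_rms ** 2 / load_ohms

# Hypothetical maximum undistorted RMS voltages for the same amplifier.
v_into_8_ohms = 28.0
v_into_4_ohms = 25.0   # sags a little into the tougher load

p8 = power_watts(v_into_8_ohms, 8.0)   # 98.0 W
p4 = power_watts(v_into_4_ohms, 4.0)   # 156.25 W
ratio = p4 / p8

print(f"Into 8 ohms: {p8:.1f} W")
print(f"Into 4 ohms: {p4:.1f} W")
print(f"Quoting the 4 ohm figure inflates the number by {ratio:.2f} times")
```

With these example voltages the increase is about 1.6 times: less than double, but still a healthy boost to the headline figure.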

I hasten to add that I am not chastising JBL here. In fact, I am congratulating it for its openness about this, and I wish that other companies would follow its example. But I do doubt that this measurement actually yields much more useful information than continuous power output.

I rarely toss out old stuff that I've written. I have next to me, for example, photocopies of all my police notebooks from 1979 to 1985, and just about everything else I've written since. The same is true in the computer age. I still have the very first article I wrote for publication back in 1992.

On Sunday I was looking for a spreadsheet I did a few years ago which lists the wavelengths, and quarter and half wavelengths, for different audio frequencies at one third octave intervals. I was doing a piece on optimising subwoofers and wanted to make a point about constructive and destructive interference.
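The spreadsheet itself isn't reproduced here, but the same sort of table can be sketched in a few lines. This assumes a speed of sound of 343 metres per second (roughly right for air at 20°C) and exact one third octave steps starting at 20 hertz; the nominal band centres printed on equipment are rounded versions of these.

```python
SPEED_OF_SOUND = 343.0   # metres per second, assumed (air at about 20 degrees C)

def third_octave_frequencies(start_hz=20.0, count=10):
    """Frequencies at exact one third octave steps: each is 2**(1/3) times the last."""
    return [start_hz * 2 ** (n / 3) for n in range(count)]

def wavelength_table(start_hz=20.0, count=10):
    """Rows of (frequency, full, half and quarter wavelength in metres)."""
    rows = []
    for f in third_octave_frequencies(start_hz, count):
        full = SPEED_OF_SOUND / f
        rows.append((f, full, full / 2, full / 4))
    return rows

rows = wavelength_table()
print("   Hz    full    half  quarter")
for f, full, half, quarter in rows:
    print(f"{f:6.1f} {full:7.2f} {half:7.2f} {quarter:8.2f}")
```

At 40 hertz, for example, the full wavelength comes out at a bit over 8.5 metres, which is why room dimensions matter so much to subwoofer placement.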

In searching for that, I came across a small spreadsheet I did back in October 2002 in which I was comparing the prices for flash memory cards in a range of sizes and formats. The prices were taken from the web page of one of the better value online merchants of the time. This is the table:

| Size | Compact flash | SmartMedia | Memory Stick | SD |
|---|---|---|---|---|
| 16MB | $26 | | | |
| 32MB | $51 | $41 | $81 | $71 |
| 64MB | $73 | $72 | $116 | $113 |
| 128MB | $142 | $149 | $209 | $208 |
| 256MB | $277 | $449 | | |
| 512MB | $602 | | | |
| 1024MB | $1789 | | | |

So here it is, May 2007, less than five years later. Today I received some flash memory cards I ordered online last week. The largest of them was the new SDHC (Secure Digital High Capacity) format. It is physically the same as SD, but breaching the 2GB barrier required changes to the logic, so most SD devices won't support it (although my Pentax K100D camera will). The capacity of this card is 4GB. Cost: $89.95. And that includes a small USB card reader.

In other words, even though it is 64 times the size, it costs less than a 64MB SD card back in 2002. It's beginning to look like flash card based digital video cameras are going to be the way of the future.
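For the curious, the cost per megabyte works out as follows. This sketch uses only the two prices quoted above and treats 4GB as 4,096MB, which is my assumption about how the capacity is counted.

```python
def dollars_per_mb(price, capacity_mb):
    """Simple cost per megabyte."""
    return price / capacity_mb

sd_2002 = dollars_per_mb(113.00, 64)          # 64MB SD card, October 2002
sdhc_2007 = dollars_per_mb(89.95, 4 * 1024)   # 4GB SDHC card, May 2007

capacity_ratio = (4 * 1024) / 64
price_fall = sd_2002 / sdhc_2007

print(f"2002: ${sd_2002:.2f} per MB")
print(f"2007: ${sdhc_2007:.3f} per MB")
print(f"Capacity up {capacity_ratio:.0f} times, cost per MB down about {price_fall:.0f} times")
```

So the cost per megabyte has fallen by a factor of roughly 80, and that is before allowing for the card reader thrown in with the new card.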

In my reviews I try pretty hard to find all the defects in a product that could reduce user convenience or performance, but, of course, I frequently miss stuff.

Last night I was playing a CD of The Who's Tommy, and things were very peculiar with the sound. The kick drum seemed to be quite out of time with the rest of the music, and there seemed to be a mid-bass lumpiness that shouldn't have been there. I was using small Krix speakers and a Krix subwoofer, and in my experience Krix stuff is pretty good. So what could be going on?

I plugged in a pair of headphones and the bass kick seemed back in place, so it wasn't something weird on the CD that I had previously failed to notice. Maybe, I thought, I had the distance setting on the subwoofer in the home theatre receiver set too high. I even started to adjust it (I normally put it at 3.6 metres, compared to the 2.7 metres for the front speakers). Then I realised that at a delay of just 3 milliseconds per metre, this really wasn't going to make much difference.

But this did get me thinking along the right lines. A week or so ago I was using some video gear that seemed to insert a significant delay to the video, to the point where there were lip sync issues with some material. I'm not especially sensitive to lip sync issues, so it must have been marked for it to be disturbing to me.

The receiver I was using was one of the first generation to incorporate a group audio delay circuit. What that does is insert a user-selectable amount of delay into the signal to allow you to bring the sound back into alignment with the video. I had found an 80 millisecond delay did the trick nicely.

But, it turns out, this was operating weirdly. I have my own front speakers set to 'large' in the receiver's setup, but the Krix speakers needed to be set to 'small'. That caused the receiver to extract the bass, as it should, and send it to the subwoofer instead. But it seems that the group delay does not operate on the bass sent to the subwoofer, so it was leading the rest of the sound by 80 milliseconds, or nearly a tenth of a second. I zeroed out the group delay and everything sounded as it ought to (including eliminating the mid-bass lumpiness).
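To show the scale of the two delays, here is a quick calculation. It assumes a speed of sound of 343 metres per second; the distances and the 80 milliseconds are the ones mentioned above.

```python
SPEED_OF_SOUND = 343.0   # metres per second, assumed

def delay_ms(distance_m):
    """Time for sound to travel a given distance, in milliseconds."""
    return distance_m / SPEED_OF_SOUND * 1000.0

per_metre = delay_ms(1.0)            # a little under 3 ms
sub_vs_front = delay_ms(3.6 - 2.7)   # subwoofer versus front speaker distance setting
group_delay_ms = 80.0
equivalent_distance_m = group_delay_ms / 1000.0 * SPEED_OF_SOUND

print(f"Delay per metre: {per_metre:.2f} ms")
print(f"Distance setting difference: {sub_vs_front:.2f} ms")
print(f"An 80 ms lead is like moving the subwoofer {equivalent_distance_m:.1f} m closer")
```

The distance settings account for under 3 milliseconds, while the unmatched group delay is equivalent to shifting the subwoofer more than 27 metres.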

Clearly this is something I shall have to check with future receivers.

I mentioned below that 'what we really need is a HD TV receiver that can either output 576i over a HDMI output (DVI outputs do a weird output when set to 576i, which isn't compatible with all the displays I've tested), or incorporates high quality video processing that checks the interlaced status of the actual video, and then weaves progressive source material.'

I've had a Topfield TF7000PVRt sitting around here for several months. There were significant problems with it when I first received it (a few weeks prior to it becoming available for sale), mostly to do with some of the advertised functions not working. Anyway, I supplied a long list of problems to Topfield over a period of several weeks, then I put it on the backburner while I waited for them to fix it. Today, in an endeavour to avoid doing real work, I plugged the Topfield back in, downloaded the latest firmware from the Topfield site, and updated the unit's firmware. I'm happy to report that many of the issues I had raised have been fixed. Most importantly, the 'Auto' resolution setting now works, including over HDMI, and it is possible that some HDMI compatibility issues have been eased. When I plugged in the TF7000PVRt this afternoon, I couldn't get the DVDO iScan VP50 to accept its HDMI output. I switched over to component video, upgraded the firmware, and then the VP50 was happy with it via HDMI at all resolutions.

What's most important is that now that the TF7000PVRt has 'Auto', when you switch from a 1080i station to a 576i station, then the output also changes to match. The relevance? Well, I haven't checked the TF7000PVRt's scaling and deinterlacing capabilities yet, but with the ability to deliver 576i, you can take advantage of video processors not blessed with the inclusion of a VP50-like Prep function. The Mitsubishi HC5000 HD projector, for example, uses the Silicon Optix HQV processor. It can't un-deinterlace the mess that may have been made with the source device, but it does do a fine job with 576i input.

In other words, while most of us would benefit from having a VP50, few of us can afford it. But with the Topfield TF7000PVRt, and a display device with decent quality processing, very good results can be achieved.

Below, my blog entry 'We need a videophile's High Definition Digital TV Receiver' puts the case that we are not getting all the picture we should be with standard definition digital TV, thanks to what I suspected was poor deinterlacing in the receiver.

Last night I found a way to test this directly, thanks to some advice from Marcelo, a very helpful participant on the DBA forums.

I've been testing out the DVDO iScan VP50 video processor. In fact, I'm about to write it up. This entry isn't about the VP50 particularly, other than to note that this is about the most powerful bit of home entertainment kit I've ever seen. Instead, it is about something that the VP50 revealed.

One of the VP50's many excellent features is something called 'Prep'. You can select this when the processor is being fed a progressive NTSC or PAL signal (ie. 480p or 576p). Prep causes the VP50 to un-deinterlace the video, restoring it to its original 480i or 576i.

Why? Because many source devices do a very poor job of deinterlacing. By restoring the signal to its original condition, the VP50 can then apply its own high quality deinterlacing.

So here's what I did. The other night I recorded the Cricket World Cup final on a Strong SRT-5490. Watching cricket has been a uniformly poor experience over the years, especially on a big screen. Quite aside from any deinterlacing problems, the multiple MPEG2 encoding/decoding sequences between the cricket ground and my home leave significant compression artefacts visible.

Last night I played this back and fiddled with the various processing options. I was using a BenQ 1080p DLP projector to view the picture, with its aspect ratio set to 'Real' (this invokes 1:1 pixel mapping) and the output of the VP50 set to 1080p. The Strong does not have an automatic video output mode, in which the output resolution matches that of the signal. So I normally leave it set to 1080i output, so as to get the best picture with the HD channels.

I focused my camera on the 'WIN SPORT' logo at the top right hand of the screen and took three photos of this. The first was with the Strong outputting 1080i. The second was with the output of the Strong set to 576p, and with the VP50 'prepping' the signal back to 576i, and then re-deinterlacing it properly. The third was with the output of the Strong set to 576p, but with 'Prep' switched off in the VP50. The picture to the right shows the result of this.

I can say that these photos accurately represent what I saw on the screen. They aren't just artefacts of a snapshot. Let us start with the bottom one: 576p output from the Strong, no processing (other than scaling) by the VP50. As you can see, the letters are quite fuzzy. That is because the Strong (and most other devices, other than those with high quality processors from Faroudja or Silicon Optix) deinterlace material by showing in sequence each of the two fields that make up the frame. That is, one 576 line frame is delivered by first showing the 288 lines of one of the fields (the other 288 lines are filled in by interpolation) while the other 288 lines are shown a fiftieth of a second later. This manifests as fuzziness (largely due to the interpolation) and loss of fine detail (see the cross-bar of the 'T').

This process is called 'bobbing', and it isn't too bad. But a better process is employed by the likes of Faroudja and Silicon Optix and, of course, DVDO. This goes under various names, but the essence of how it works is this: the processor identifies which bits of the picture are actually moving by comparing the two frames. It still bobs those parts, but any parts of the picture which are stable it 'weaves' together, as though it were a progressive scan picture. Since the 'WIN SPORT' logo wasn't moving, the VP50 rendered it at full resolution.
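The difference between bobbing, weaving and the motion-sensitive mix of the two can be sketched in code. This is a toy illustration only: a frame is a list of rows of brightness values, motion is detected by comparing a field with the previous field of the same type, and the threshold of 10 is arbitrary. All of that is my assumption for the sake of the example, not a description of what DVDO, Faroudja or Silicon Optix actually do.

```python
def split_fields(frame):
    """Split a frame (list of rows) into its top (even) and bottom (odd) fields."""
    return frame[0::2], frame[1::2]

def weave(top, bottom):
    """Interleave two fields back into one full-resolution frame."""
    frame = []
    for t, b in zip(top, bottom):
        frame.append(list(t))
        frame.append(list(b))
    return frame

def bob(field):
    """Build a full-height frame from one field, interpolating the missing lines."""
    out = []
    for i, row in enumerate(field):
        out.append(list(row))
        below = field[i + 1] if i + 1 < len(field) else row
        out.append([(a + b) // 2 for a, b in zip(row, below)])
    return out

def motion_adaptive(top, bottom, prev_bottom, threshold=10):
    """Weave pixels that have not changed since the previous bottom field; bob the rest."""
    bobbed = bob(top)
    out = []
    for i, t in enumerate(top):
        out.append(list(t))
        row = []
        for x, (now, before) in enumerate(zip(bottom[i], prev_bottom[i])):
            if abs(now - before) <= threshold:
                row.append(now)                    # static: keep the real line
            else:
                row.append(bobbed[2 * i + 1][x])   # moving: use the interpolated line
        out.append(row)
    return out

# A static test picture of alternating white and black lines (fine detail).
frame = [[255] * 4, [0] * 4, [255] * 4, [0] * 4]
top, bottom = split_fields(frame)

print("weave:", weave(top, bottom))
print("bob:  ", bob(top))
print("adaptive, static picture:", motion_adaptive(top, bottom, bottom))
```

On this static pattern, weaving and the motion-sensitive version return the original picture exactly, while bobbing turns the alternating lines into solid white: the fine detail is simply gone.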

So what? you ask. After all, that 'SPORT' bit shown on this page is just one hundred display pixels wide ... out of 1,920! The actual cricketers were still bobbed.

Well, there are two ways in which this makes an improvement. In much interlaced TV there is enough stable detail, which is sharpened up by this processing, to make a real difference to how sharp the overall picture appears. It is much, much more restful on the eyes.

The second way concerns material which is not interlaced, such as most drama, movies and so forth. The Strong bobs all of this as well (I confirmed that this afternoon, noticing bobbing artefacts on a film I had recorded on the Strong). But the VP50, with Prep switched on, sharpened up the picture slightly, and eliminated all those artefacts.

What have I forgotten? Oh, that's right: the top part of the picture! You know, the one delivered by the Strong at 1080i. Well, all that shows is that the Strong's scaler from 576i to 1080i is total crap.

This is something that I will be paying very close attention to in the future.

Over the past couple of months, I've noticed that Canberra's Southern Cross Ten TV station is doing something rather strange. In the usually black space of a few seconds between the end of a block of advertisements and the recommencement of a TV program, it has been inserting eight to twelve dark -- but not blank -- frames. These show a very darkened few frames from an advertisement. Sometimes it is a commercial for some product, sometimes it is part of one of the station's self-promotional logos.

The picture to the right is an example of one of these. It initially looked like a black box, but that was due to my eyes washing out the dark detail because of the slab of white surrounding the picture. So I've changed the colours for this entry. Stare at the box for a while. If that still doesn't work, copy it to your favourite picture editor, pump up the brightness and you will clearly see a 'Red Rooster' ad. Analysed with reference to a CMYK colour space, 'K' never falls below 90% (K represents black).
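For a sense of just how dark that is, here is a small sketch using the simplest textbook conversion, in which K is one minus the brightest of the red, green and blue values. Picture editors generally use colour profiles rather than this naive formula, so treat it as an approximation; the pixel values are invented.

```python
def black_channel(r, g, b):
    """Naive CMYK 'K' from 8-bit RGB: 1 minus the largest channel, as a fraction."""
    return 1.0 - max(r, g, b) / 255.0

def brighten(pixel, gain):
    """Multiply each channel by a gain, clipping at 255."""
    return tuple(min(255, int(c * gain)) for c in pixel)

dark_pixel = (25, 10, 5)   # hypothetical pixel from one of the dark frames

print(f"K = {black_channel(*dark_pixel):.0%}")
print("After a gain of 10:", brighten(dark_pixel, 10))
```

Under this formula a K of 90% or more means no channel rises above 25 out of 255, which is why the frames look black until the brightness is pumped up.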

I wonder if this is intentional, or some weird technical bug has intruded.

The issue before last of Sound and Image magazine carries my article comparing the picture quality of SDTV and HDTV. In brief, I captured snippets of both versions of the same programs on a high definition PVR (the Strong SRT-5490), downloaded them to my computer, then took a number of matching frames for the comparison.

The article came out quite nicely, I thought, but in the translation of the stills to the printed page, I thought the differences weren't quite as clear as they were on my computer screen. With my similar article in the current issue, with Blu-ray vs DVD, the differences in the comparison shots are a lot clearer.

Anyway, I've placed the whole article, complete with the comparison photos, here: Standard Definition TV vs High Definition TV: Is the latter really better than the former?

UPDATE (Saturday, 28 April 2007, 11:49 pm): I've also improved and uploaded an article I did for The Canberra Times on the 576p standard of 'HDTV': 'When HDTV is not HDTV: 576p is not high definition'

I've been struggling to get my head around this for some time. The problem has been a lack of replicable test signals. But I think I've got it nailed, at last.

I was watching a late night movie on ABC TV recently. Normally these are old black and white things, but this one happened to be in colour. It wasn't particularly good. This was on digital TV, and I was using a Strong SRT-5490 HD PVR. This was feeding via its DVI output to the HDMI input on a BenQ 1080p DLP projector. But not directly. The signal was going via a DVDO iScan VP50, one of the best video processors in the world.

The output of the Strong was set to 1080i, and the VP50 was converting this to 1080p.

As I was watching, I noticed that there was a moire pattern on the fine texture of the necktie of one of the characters. So I recorded a snippet of the video.

Many of these late night movies are of appalling quality, primarily because the video appears to have been derived from an NTSC source, and converted to PAL.

You can convert NTSC to PAL by doing some heavy processing: reversing the 3:2 pulldown so that you end up with the original film frames, and then recompiling them into 25 frames per second PAL. But these movies are rarely like that. Instead, they are converted to PAL directly from NTSC, with most of the frames showing heavy interlacing. That is dealt with by video processors by eliminating the second field -- at least for moving parts of the picture for high quality processors such as the VP50 -- further softening what is already pretty fuzzy video.
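Here is an idealised sketch of 3:2 pulldown and its reversal. Film frames are represented by simple labels and each video field just carries the label of the frame it came from, which is an assumption made to keep the example short. Real reverse pulldown has to detect the cadence from the picture content itself.

```python
def pulldown_32(film_frames):
    """Spread film frames across video fields in a repeating 2, 3 pattern."""
    fields = []
    for i, frame in enumerate(film_frames):
        repeats = 2 if i % 2 == 0 else 3
        fields.extend([frame] * repeats)
    return fields

def reverse_pulldown(fields):
    """Recover the film frames by collapsing runs of fields from the same frame."""
    frames = []
    for f in fields:
        if not frames or frames[-1] != f:
            frames.append(f)
    return frames

film = list("ABCD")
fields = pulldown_32(film)

print("Fields:   ", "".join(fields))
print("Recovered:", "".join(reverse_pulldown(fields)))
print("Fields from one second of film:", len(pulldown_32(list(range(24)))))
```

Four film frames become ten fields, so 24 frames fill the 60 fields of one second of NTSC. Once the original frames are recovered, a common approach is to run them at 25 frames per second for PAL, making the film play about four per cent fast.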

But this particular movie didn't suffer from that problem. It appeared to have been telecined from the original film, and so was actually progressive in nature. I downloaded the short recording onto my computer, and examined the video closely. Yes, it was definitely progressive.

At this point, I would suggest that you read my article on problems with DVD player deinterlacing, especially the section headed 'Deinterlacing video-sourced content'. As you will see from this, a moire effect often happens when fine patterns on progressive source video are inappropriately deinterlaced using the 'bobbing' method. That's what seemed to be happening in this case.

The ABC TV program was broadcast as 576i. The procedure for converting 576i video to 1080i is to first convert it to 576p (ie. deinterlace it) and then scale it up to 1080. I'm fairly confident that the Strong SRT-5490 just bobs all 576i material on the way to upscaling it to 1080i. I strongly suspect that this is also done by all HD TV receivers on the Australian market. I shall confirm this over time.

So what we really need is a HD TV receiver that can either output 576i over a HDMI output (DVI outputs do a weird output when set to 576i, which isn't compatible with all the displays I've tested), or incorporates high quality video processing that checks the interlaced status of the actual video, and then weaves progressive source material.

UPDATE (Monday, 30 April 2007, 4:26 pm): Further on this, with real proof, in entry 'Proof of crappy video output from HDPVR'.

Last night I started working on a blog entry on this subject, but soon after I started I realised that I was putting in too much work to just give it away. So this is just a brief taste. Full details will be in a forthcoming issue of Sound and Image magazine.

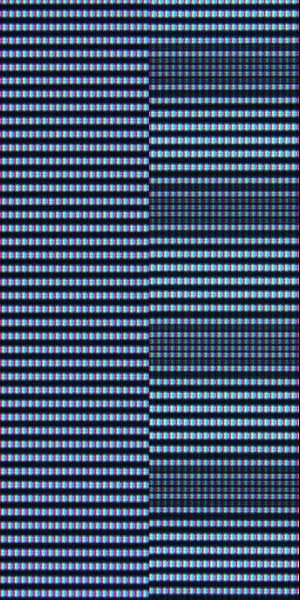

The picture to the right consists of details from two closeup photos I took last night of a 1080p LCD display. In both cases I was feeding a 1080p test signal from a DVDO iScan VP50 to the display. The test signal was alternating black and white lines for the entire 1080 line height of the picture.

The picture on the left shows the excellent result achieved when I enabled 1:1 pixel mapping in the display. The picture on the right was with the default setting of the display, which scaled up the picture slightly. Obviously one is far more accurate than the other.

In the article I have used an analogy of the 'beat', the cyclic volume variation when two notes are played which are very close to, but not quite exactly, matched. That led to a discussion with my editor of the differences and similarities between the auditory and visual worlds. Naturally I had some thoughts on the matter.

Both musical beats and the display example in the photos just come down to interference patterns. You could make the reverse analogy and say that a beat generated by two slightly mismatched tones is actually an aural moire pattern.
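The parallel can be shown with a toy model. The sketch below scales a 1,080 line alternating pattern to 1,120 lines by picking the nearest source line. The 1,120 figure is made up (I don't know how much the display in the photos actually scaled), and real displays use smoother scaling, but the periodic disturbance arises in the same way.

```python
def alternating_lines(count):
    """Test pattern: white and black lines alternating down the picture."""
    return [255 if y % 2 == 0 else 0 for y in range(count)]

def scale_nearest(lines, out_count):
    """Crude vertical scaler: each output line copies the source line it falls on."""
    n = len(lines)
    return [lines[y * n // out_count] for y in range(out_count)]

def doubled_line_positions(lines):
    """Positions where two neighbouring lines are the same, breaking the pattern."""
    return [y for y in range(1, len(lines)) if lines[y] == lines[y - 1]]

def beat_frequency(f1, f2):
    """Beat rate of two tones: the difference between their frequencies."""
    return abs(f1 - f2)

source = alternating_lines(1080)
mapped = scale_nearest(source, 1080)   # 1:1 pixel mapping
scaled = scale_nearest(source, 1120)   # hypothetical slight upscale

faults = doubled_line_positions(scaled)
spacings = {b - a for a, b in zip(faults, faults[1:])}

print("Faults with 1:1 mapping:", len(doubled_line_positions(mapped)))
print("Faults when scaled:", len(faults), "spaced", spacings, "lines apart")
print("Beat between 440 Hz and 442 Hz tones:", beat_frequency(440, 442), "Hz")
```

The number of faults equals the difference between the two line counts (1,120 minus 1,080 is 40), in the same way that the beat rate equals the difference between the two tone frequencies.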

However, in the visual field the effect is far less obvious than in the musical field. It's amazing how much rescaling and conversion of video can take place with remarkably little impact on the picture. Try to do to music the stuff that we do to video, and it would sound like crap.

I think it's because of the differences between the ways our eyes and ears work. The latter do divide sound up into several frequency ranges, with different parts of the ear responding to different bands, but in the end it is all woven together and we get a seamless capture of up to ten octaves.

Our eyes only have a range of one octave, so that immediately rules out doing anything artistic with light in a way akin to music. It's the coincidence of overtones that makes musical harmony work: the second overtone of C is almost identical to the first overtone of G -- there is just 0.89 hertz difference between them for Middle C and the G above it, and if you listen carefully you can hear the slow beat; and there is 1.18 hertz difference between the third overtone of Middle C and the second overtone of the F above it.
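Those two differences can be checked with a few lines of arithmetic. The sketch assumes equal temperament tuning with the A above Middle C at 440 hertz. The near-coincidences are between three times the frequency of Middle C and twice that of G, and between four times Middle C and three times F.

```python
A4 = 440.0   # assumed concert pitch, equal temperament

def note_hz(semitones_from_a4):
    """Frequency of a note a given number of semitones above (or below) A440."""
    return A4 * 2 ** (semitones_from_a4 / 12)

middle_c = note_hz(-9)   # about 261.63 Hz
f_above = note_hz(-4)    # about 349.23 Hz
g_above = note_hz(-2)    # about 392.00 Hz

beat_c_g = abs(3 * middle_c - 2 * g_above)
beat_c_f = abs(4 * middle_c - 3 * f_above)

print(f"C and G: {beat_c_g:.2f} Hz apart")
print(f"C and F: {beat_c_f:.2f} Hz apart")
```

In just intonation both differences would be zero. The small residue is the compromise that equal temperament makes so that every key sounds equally in tune.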

The other difference is that our eyes treat brightness and colour very differently. It is colour, of course, that is the equivalent of tone in sound. But we do not see a continuous spectrum of colour in the way that we hear a continuous spectrum of sounds. There are three colour bands, and all the light frequencies near to the centre point of one of those bands are lumped together and captured by just one of the three types of pigmented colour receptors in the eye.

What our brains tell us is a smooth range of colours is actually the results of mixing three levels of response from the three types of receptors to three bands of frequencies. In fact, you can take two sets of three frequencies, and if the levels are adjusted correctly, you can make them both look the same, although they would measure very differently with a suitable instrument.

I was watching ABC digital TV last night when I happened to notice that the home theatre receiver was indicating that the incoming digital audio was 5.0 channels, rather than the usual 2.0. To double check, I recorded a bit and transferred it over to my computer.

Remember, ABC TV is unusual in delivering two audio streams. There is a two channel MPEG2 stream at 256kbps, and a high bitrate Dolby Digital stream. This runs at 448kbps (the maximum provided on DVDs, although Dolby Digital actually supports up to 640kbps) and last time I checked was in two channel format.

But now, I see, it is delivering 5.0 channels (same bitrate). You won't notice this unless you have made your set top box default to the Dolby Digital audio track, if available.

Of course, Dolby Digital 5.0 is merely a delivery system. Whether the sound will be surround or not depends entirely on what is packed into those five channels. The show I checked, for example, was a quite forgettable late night movie called The Wild and the Willing. Forgettable except that it was the debut of a number of fine British actors, including John Hurt.

The packing of the stream may be five channel, but of course the content was only mono.

There is one problem with this. I routinely have the input of my receiver to which the set top box is connected switched to Dolby Pro Logic II decoding, so as to extract any surround content that may be encoded into the two channel source. But this won't work on 5.0 (or 5.1) signals. I doubt that it will with any receivers. So for those shows, it's necessary to switch back to the MPEG2 audio.

UPDATE (Wednesday, 18 April 2007, 11:21 am): Well, that was short-lived. I had the ABC on again last night, and the Dolby Digital track was in 2.0 channels. They must be experimenting.

I was perusing, as I so often do, the Internet Movie Database when I stumbled upon something quite surprising. It was that the 1929 Disney animation, 'The Skeleton Dance', was banned in, of all places, Sweden. Clicking on the provided link, I discovered a whole bunch of startling bannings in Sweden (there are 229 in total, according to IMDB):

- Drum (1976), an arguably offensive sexploitation movie;

- A Kiss Before Dying (1956), an excellent thriller starring Robert Wagner and Joanne Woodward, based on the novel by Ira Levin (later known for Rosemary's Baby, The Boys from Brazil, The Stepford Wives and, one of my favourites, This Perfect Day);

- Mad Max (1979), the famous Aussie flick that made Mel Gibson;

- Magnum Force (1973), a sequel to Dirty Harry;

- The Man Who Knew Too Much (1934), Hitchcock's original version; and

- The Public Enemy (1931), a very important James Cagney movie.